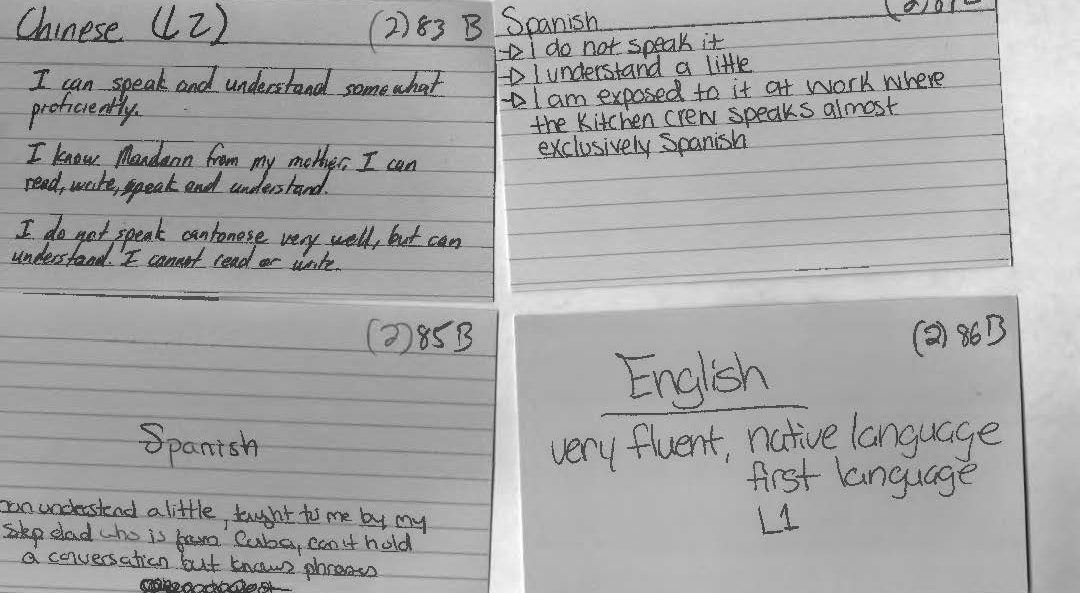

One of the biggest challenges in working with qualitative data, such as the self-directed and open-ended responses our participants provided, is interpreting those statements in a way that generates useful data. In this particular study, I have observed that the relatively vague prompt used when administering the survey (something to the effect of “make a statement about each language that you’re aware of”) yielded responses that were either very informative or very (very) vague. Because we asked participants to handwrite their responses on index cards, rather than having someone interview them and record their answers or having them use a digital answer form (like the one found elsewhere on this blog), we also had to contend with some unclear or illegible handwriting. Though deciphering somebody’s handwriting ranks relatively low among the challenges of qualitative research, it can nonetheless be frustrating.

Data

Language Clouds, literally!

Fooling around with Tableau, I found this cool feature that literally creates clouds! Take a look at this.

Where the Blog is at the Moment

The picture of my desk above illustrates the main issue that we had for the blog during the summer of 2017.

When we all met for our summer meeting, the main problem was that we either couldn’t access our Google Drive to get our information or couldn’t connect to the wifi. Since Prof. Quizon couldn’t access the drive, Laura suggested getting the data from her laptop to get around the wifi problem. However, another problem came up. The laptops we use for this blog are either our personal computers or laptops provided by the school. To log into a school laptop, you need to sign in with your student email, and to do that, you need wifi access. But for some odd reason, Laura’s laptop could not recognize the campus wifi.

After finally being able to connect to the wifi and getting all the data we needed, we all discussed issues that came up at that point.

One of the main issues, besides connecting to the internet and getting our data, was how to code our data in Excel, because all of it was qualitative. What we decided and how we did it is described in detail in a different post, but it all came down to figuring out which category each response belonged in.

The second issue, which affects me more personally than the others, is how being an alum affects the productivity of the blog and the internship. The main problem is simply getting onto the blog, because we all use our student emails to log in, and not being a student anymore complicates that. The quick fix was to switch to my personal email and then relinquish admin rights after I hand the blog over to the next group.

The final issue touches on the first, but in more detail: how to categorize something that doesn’t fit an existing category. For example, how would you categorize learning a language from a hymn or song? Would you say the person can speak and recognize it but not understand it? Stephen raised this issue when he realized that some students who took the survey said they could sing and recognize a language but not actually read or understand it.

The quickest solution we settled on was to make a special category for these cases, since it affected only about five or six entries. After working through all our issues, we had pizza and left to enjoy the July weather.

My Opinion: The Best and Worst Functions of Excel for this Project

When creating our database, we had to enter a large amount of information into each column for each index card. For this, I loved Excel’s simple yet amazing ability to freeze the first row of the spreadsheet (the same can be done for columns). Whether we were on index card 2, 20, or 120, we could clearly see the title of the column we were filling in.

Another awesome Excel feature was the pivot table. Pivot tables allowed us to quickly sort and count our data, giving us an idea of what it would look like once uploaded for data visualization. For instance, with a pivot table we could see how many speakers were attributed to each language. We could also see who entered which data and sort by type of information. For example, if one of the team members had clicked on my name, they could see how many of the cards I entered were English. However, we decided not to keep the pivot tables as part of our data set, because the external visualization program we used showed the same information once we uploaded our data, and even offered clickable charts, maps, etc.
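For readers who want to reproduce this kind of count outside Excel, here is a minimal Python sketch of what a simple pivot table does: count rows per value of one field, or per pair of fields. The field names (`participant_id`, `language`, `entered_by`) and the sample rows are hypothetical, invented for illustration rather than taken from our spreadsheet. Since each card recorded one language for one participant, counting cards per language stands in for counting speakers per language.

```python
from collections import Counter

# Hypothetical sample rows, one per index card (invented for illustration).
cards = [
    {"participant_id": 3, "language": "English", "entered_by": "AB"},
    {"participant_id": 7, "language": "Spanish", "entered_by": "EH"},
    {"participant_id": 3, "language": "Tagalog", "entered_by": "AB"},
    {"participant_id": 1, "language": "English", "entered_by": "MP"},
    {"participant_id": 7, "language": "English", "entered_by": "AB"},
]


def count_by(rows, field):
    """Count rows per value of one field, like a pivot table with a single row field."""
    return Counter(row[field] for row in rows)


def count_by_pair(rows, first, second):
    """Count rows per (first, second) combination, like a pivot with row and column fields."""
    return Counter((row[first], row[second]) for row in rows)


speakers_per_language = count_by(cards, "language")
cards_per_enterer = count_by_pair(cards, "entered_by", "language")

print(speakers_per_language.most_common())  # [('English', 3), ('Spanish', 1), ('Tagalog', 1)]
print(cards_per_enterer[("AB", "English")])  # 2: English cards entered by AB
```

Missing combinations simply count as zero, which matches how an empty cell in a pivot table reads.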

A final function we greatly appreciated was the ability to upload an Excel spreadsheet to Google Drive, share it, and then download it again as an Excel file. This helped greatly, as the team felt more comfortable with Excel than with the Google spreadsheet. Though I’m not sure whether the credit goes to Google or Microsoft (or both), this was nonetheless a great function.

But with the best, also comes the worst…

The biggest problem for me when starting this project was working with qualitative rather than quantitative data. When I first learned an earlier version of Microsoft Excel in high school, we worked with quantitative data and functions, so at first I found it a bit challenging to enter names and words instead of mathematical problems and functions. However, I was surprised to find that when you work within a column, Excel suggests a word previously used in that column. So if I was typing the last name ‘Smith’ for the second or fifth time, I would only have typed up to the ‘m’ before Excel suggested “Smith” for the cell.

Where this turns sour for me is that if you skip a cell and start typing in the cell below it, the suggestion no longer appears. I REALLY wish this carried over within the same column. With really long or odd names, I wished Excel would still suggest the word even after a skipped cell.

When trying to visualize our data, we ran into a problem. Where we had entered only countries or regions (e.g., Atlantic Midland, Inland North) as a language’s origin, the visualization tool we were using could not figure out where to map the languages. So we had to go back and enter the capital of each country of origin, and designate a ‘capital’ for different varieties of English (e.g., North Jersey vs. South Jersey English), which resulted in a more accurate depiction of where each language originates. Overall, I wish Microsoft Excel would improve its compatibility with other software and websites. Though I understand this takes a lot of time, thought, and agreement between companies, companies like Amazon and PayPal work with other websites and services to make their services smoother to use. Microsoft therefore has the ability to work better with other companies’ programs, and I hope both sides will work toward that in the near future.

Neither of the images above belongs to me. Both are from ‘Spirited Away’, the property of Studio Ghibli/Disney, and were found here: giphy.com/search/spirited-away-gif

The Best and Worst of Viewshare

Viewshare was pretty awesome the first time around. The best part was the automatically generated map. Not only did it show the plotted points, but by hovering over a point you could see more specific details (such as which person reported speaking a language), and by clicking that person’s randomized ID number, you could see all the details of their entry.

However, the amazement soon faded after I took down the data to make adjustments. The second time around, I could not re-upload the files. We lost all the visuals from the first data set and could not recover them; according to the site, the file was corrupted. Luckily, the second data set went up with only a few minor problems: the map was generated, and I was able to fix the other issues quickly.

Overall, Viewshare is not too bad, and it could work even better with smaller, simpler data sets. When functioning properly, the map and charts are very user-friendly and interactive, which was our main goal in presenting our data.

Using Viewshare

ViewShare is a website where people can upload a set of data, such as an Excel spreadsheet, and create charts, maps, lists, and timelines, depending on what kind of information the program can read from the data. Professor Quizon set up the account, and I, Ellie Hautz, explored its features with a mock Microsoft Excel spreadsheet to see how we could use ViewShare in our research.

We all worked on coding the information into the Microsoft Excel file. I took the final version and uploaded it to ViewShare to see what I could do with it, and I was excited by the number of charts I could make. When I looked at the map, however, it had plotted points where I had not intended. For instance, we had specified New York as New York, NY, but the program read it as New York in the United Kingdom. I thought that entering coordinates might fix the plotting, so I discussed with the group which coordinates to use. We decided that North New Jersey English would be based on Bergen County and South New Jersey English on Cape May County, since they are the northernmost and southernmost coastal counties. The majority of our participants were from New Jersey, but a good number indicated other states or countries. To plot these, we decided to use the capital of the state or country of the language’s origin unless the participant specified otherwise.

So I added a section to my own Microsoft Excel spreadsheet with the coordinates for these areas, but the map still was not working properly. Looking closely, I saw that the program asked for the city and state and/or country of each data point. So I went through again and entered the capital of every county, state, and country. Finally, it worked, and the map plotted correctly.

Unfortunately, about a week after we uploaded the corrected data, ViewShare stopped being compatible with our first data set.

Place names: New Jersey/New York to global speech communities

Our team member Ellie Hautz was tasked with figuring out how to map place names that respondents provided as regional descriptors for a language. In a sense, individuals were identifying where a particular speech community lives, either from their own lived experience or inferred from information shared by others. The map this query generates is quite distinct: using Viewshare, we see a wider and richer distribution, encompassing not only the perceived geographical origins of a language but also the locations of speech communities as witnessed or inferred by our respondents.

One of the most interesting decisions the team made was how to capture the high incidence of descriptors for New Jersey and New York varieties of English. Viewshare required specific latitude/longitude codes in order to generate a map. As a New Jersey-based university, our team felt obliged to rise to the richness of the local data before us. After exploring various strategies, we decided to adapt principles used in Rick Aschmann’s American Dialects website. Like Aschmann’s site and the broader literature on English dispersal in the US, we used the Eastern seaboard as a starting point. However, to graphically capture what our respondents refer to as “northern NJ,” we mapped it onto New Jersey’s northernmost seaside county (Bergen), with its capital city of Hackensack. Similarly, verbatim descriptors of “southern NJ” were mapped onto the southernmost seaside county (Cape May), with its same-named capital city. Aschmann used slightly different terms because he was plotting nationally rather than within a single state. For this data set, what appears elsewhere as “Inland North” was coded as “Northern NJ,” and what is referred to elsewhere as “Atlantic Midland” was coded as “Southern NJ.” Whenever New York was mentioned, the variety descriptor “Greater New York City” was used.
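To make these coding rules concrete, here is a minimal Python sketch of a descriptor-to-place lookup. It is an illustration under stated assumptions, not the team’s actual spreadsheet: the verbatim descriptor spellings and the sample entries in `CAPITALS` are hypothetical, and the output uses the “city, state and/or country” form that Viewshare asked for, which avoids ambiguous names like New York. Anything not in the tables returns `None` so it can be reviewed by hand.

```python
from typing import Optional

# Coded varieties and the place each one was mapped onto, per the rules above.
VARIETY_TO_PLACE = {
    "Northern NJ": "Hackensack, New Jersey, United States",
    "Southern NJ": "Cape May, New Jersey, United States",
    "Greater New York City": "New York, New York, United States",
}

# Hypothetical verbatim descriptors (lowercased) mapped to the coded varieties.
DESCRIPTOR_TO_VARIETY = {
    "northern nj": "Northern NJ",
    "north jersey": "Northern NJ",
    "inland north": "Northern NJ",
    "southern nj": "Southern NJ",
    "south jersey": "Southern NJ",
    "atlantic midland": "Southern NJ",
    "new york": "Greater New York City",
}

# Hypothetical sample of the capital fallback for other states and countries.
CAPITALS = {
    "ohio": "Columbus, Ohio, United States",
    "spain": "Madrid, Spain",
    "philippines": "Manila, Philippines",
}


def place_for(descriptor: str) -> Optional[str]:
    """Return a place string for a respondent's descriptor, or None if unknown."""
    key = descriptor.strip().lower()
    variety = DESCRIPTOR_TO_VARIETY.get(key)
    if variety is not None:
        return VARIETY_TO_PLACE[variety]
    return CAPITALS.get(key)  # None means this entry needs manual review


print(place_for("North Jersey"))  # Hackensack, New Jersey, United States
print(place_for("Spain"))         # Madrid, Spain
```

Keeping the variety table separate from the descriptor table means a new spelling from a card only needs one new line, without touching the place each variety maps to.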

Interestingly, our college campus is in Essex County, NJ, a region at the cusp of the Northern NJ/Inland North and Greater New York City language varieties.

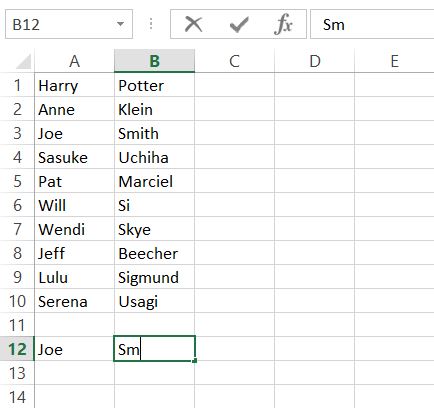

Microsoft Excel: Randomized Number I.D. for Participants

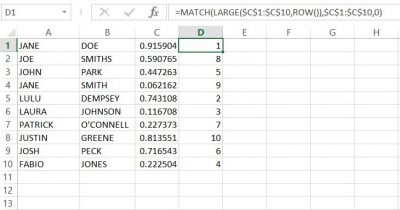

To present our data by participant, the ethical approach was to avoid revealing the actual names of the individuals who gave us our data. So we used Microsoft Excel to generate and assign random numbers, rather than simply numbering the participants in order. These numbers then served as the I.D.s for each participant. On a separate spreadsheet, we put participants’ first and last names in columns ‘A’ and ‘B’ respectively (here I have used ten fake names* as an example). For our data, we had a list of all the participants’ names in alphabetical order by last name.

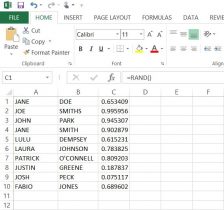

Next, I used the RAND (random) function. Entering =RAND() in column ‘C’, in cells C1 to C10, gave us a random decimal number between 0 and 1 in each cell. Then I tried using the RANDBETWEEN function in column ‘D’, entering =RANDBETWEEN(1,10). Although this gave us a random whole number between 1 and 10, some numbers repeated. So one of our biggest problems became finding a way to have Excel create random integers that did NOT repeat.

Finally, with a little help from the library and the internet, I used the following formula in column ‘D’ to generate NON-REPEATING whole numbers:

=MATCH(LARGE($C$1:$C$20,ROW()),$C$1:$C$20,0)

The result was what we were looking for: anonymity for our participants. With this success, we copied the numbers and pasted them next to the names in the list of participants in our data set.**

*None of these names are meant to have any relation to any person(s) alive or deceased.

**When I entered the function in column ‘D’, the random values in column ‘C’ changed automatically, because =RAND() generates new numbers every time the sheet recalculates, but they remained random. You need to keep the =RAND() formulas in column ‘C’ for the function in ‘D’ to work.

There may be other ways of achieving the same outcome, but this formula worked well for us in Excel.
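For anyone working outside Excel, here is a minimal Python sketch of the same idea: give every row a random decimal (like =RAND()), then, for row r, take the position of the r-th largest decimal (like =MATCH(LARGE(...,ROW()),...,0)). Because each position is used exactly once, the IDs never repeat. The function name and the fake names are hypothetical. In plain Python, `random.sample(range(1, n + 1), n)` produces the same kind of shuffled, non-repeating list in one line; the longer version below just mirrors the spreadsheet steps.

```python
import random


def assign_random_ids(names, seed=None):
    """Pair each name with a unique random ID from 1 to len(names).

    Mirrors the spreadsheet approach: one random decimal per row (=RAND()),
    then, for row r, the position of the r-th largest decimal
    (=MATCH(LARGE(C, r), C, 0)). Each position appears exactly once,
    so the IDs never repeat. Assumes the names are unique.
    """
    rng = random.Random(seed)
    keys = [rng.random() for _ in names]  # column C: a random decimal per row
    # Row positions ordered by their random decimal, largest first (LARGE with k = 1, 2, ...).
    order = sorted(range(len(names)), key=lambda i: keys[i], reverse=True)
    # Column D: for row r, the 1-based position of the r-th largest decimal (MATCH).
    ids = [position + 1 for position in order]
    return dict(zip(names, ids))


# Hypothetical fake names standing in for the participant list.
fake_names = ["Avery Adams", "Blake Brown", "Casey Clark", "Drew Davis", "Emery Evans",
              "Finley Ford", "Gray Green", "Harper Hill", "Indy Irwin", "Jordan Jones"]
for name, participant_id in assign_random_ids(fake_names, seed=2017).items():
    print(participant_id, name)
```

Passing a fixed `seed` makes the assignment reproducible, which plays the role of pasting the Excel results as fixed numbers so they stop changing on recalculation.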

Microsoft Excel vs. Google Sheets: Which One Did We Choose?

Initially, we were going to use Google’s spreadsheet because we could all edit it in one place, but we encountered a few problems. When we opened our Microsoft Excel spreadsheet in Google’s spreadsheet, some of the data overlapped into other columns, making it hard to read. Occasionally, data that was present in the Excel sheet was missing from Google’s spreadsheet. In addition, we all had the same version of Microsoft Excel (2013) pre-installed on our laptops, which made compatibility easy. We unanimously decided to use Microsoft Excel to enter data, but to use Google Drive to save and share our data in the cloud. Google Drive also notified us by email whenever one of us contributed to our shared folder.

We created three folders in Google Drive to organize our saved spreadsheets and other files: ‘1st DH Raw Data’, ‘2nd DH Raw Data’, and ‘DH Meeting Docs’. The third folder held our meeting minutes, that is, what we discussed when we met and which goals we agreed to complete before the next meeting. The first and second raw data folders each had ‘checked’ and ‘unchecked’ subfolders, where our naming convention came in handy. Each raw data folder also held the scanned copies of its index cards. This kept all our files well organized and let us share them efficiently. Although we all saved the most recent files to our desktops and to a shared USB drive for backup, Google Drive ensured that our current and previous files were in one place that we could all access from any computer.

Our Naming Convention and Communication

All the data we entered had to be divided evenly among the team, and it also had to be checked for accuracy, with a record of who had last saved it. We agreed on an author naming convention using our initials. In the Microsoft Excel spreadsheets, we designated four additional columns for this purpose, plus two more columns for communicating on the spreadsheet itself. Columns D, E, R, S, T, and U were used as follows: D was ‘Entered by CQ/MP/AB/EH’, E was ‘Date Entered’, R was ‘Comments’, S was ‘Checked by CQ/MP/AB/EH’, T was ‘Date Checked’, and U was ‘Additional Notes’. The ‘Comments’ column was used to communicate changes to the data. Say I had entered a name incorrectly as ‘McThomas’ and Michelle caught the mistake; she would write ‘MP-AB fixed last name to MacThomas’ in that row under column R. This tells us that Michelle is writing to Anastasia (me) that she fixed the error I made in the last name. If we had a question or were unsure about a data entry or part of one, we would also write in column R. For example, if Ellie had a question about a missing name, she could write ‘Which participant is this?’ in the ‘Comments’ column, or simply ‘No name given’.
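The ‘Comments’ convention works like a tiny message format: the sender’s initials, a hyphen, the recipient’s initials, then the message. Here is a minimal Python sketch of reading it back, assuming entries always start with two-letter initials from the team list; the function and type names are hypothetical, and free-form notes such as ‘No name given’ simply return `None`.

```python
import re
from typing import NamedTuple, Optional

TEAM = {"CQ", "MP", "AB", "EH"}  # initials used in the 'Entered by' and 'Checked by' columns

# Sender initials, a hyphen, recipient initials, whitespace, then the message.
COMMENT_PATTERN = re.compile(r"([A-Z]{2})-([A-Z]{2})\s+(.+)")


class Note(NamedTuple):
    sender: str
    recipient: str
    message: str


def parse_comment(text: str) -> Optional[Note]:
    """Split a 'Comments' entry like 'MP-AB fixed ...' into sender, recipient, message."""
    match = COMMENT_PATTERN.match(text.strip())
    if match and match.group(1) in TEAM and match.group(2) in TEAM:
        return Note(*match.groups())
    return None  # a free-form note or question, not addressed to anyone


print(parse_comment("MP-AB fixed last name to MacThomas"))
print(parse_comment("No name given"))
```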

The initials convention showed not only who entered and checked the data, but also who had last saved it. For example, if I was the first to enter data, I would save the new Excel sheet as ‘AB Raw Data Set 1’. If Ellie was next to enter her data and check mine, the new sheet would be saved as ‘AB-EH Raw Data Set 1’. Then, if Michelle did the same, the file would be saved as ‘AB-EH-MP Raw Data Set 1’. This naming method continued until all the data was entered and checked.
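The file-naming rule is simple enough to express in a few lines. Here is a minimal Python sketch, assuming every file name is the chain of initials, a single space, and then the rest of the title; the function name is hypothetical.

```python
def add_saver(file_name: str, initials: str) -> str:
    """Append the next saver's initials to the chain at the front of a file name.

    Assumes the name looks like '<initials chain> <title>', e.g. 'AB Raw Data Set 1'.
    """
    chain, title = file_name.split(" ", 1)  # split off the initials chain only
    return f"{chain}-{initials} {title}"


name = "AB Raw Data Set 1"
for saver in ["EH", "MP"]:
    name = add_saver(name, saver)
    print(name)
# AB-EH Raw Data Set 1
# AB-EH-MP Raw Data Set 1
```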

Overall, this system of using our initials to track who last saved, checked, and entered data worked very well. It was a simple, clear way for the team to know who had last saved the most recent data and who was communicating with whom within the spreadsheet, especially between meetings.

The Origin of the Project

In the Fall 2015 Linguistic Anthropology class taught by Dr. Quizon, students were asked to share information about any and all languages they knew. She handed out note cards and instructed the class to write down one language per card. Underneath the name of the language, they were asked to write anything they wished to say about it. They used descriptors of their own design, making these cards rich with open-ended qualitative data. On the back of each card, they were asked to write their names.

With support from Seton Hall’s Digital Humanities Fellowship initiative, Dr. Quizon and three student interns who completed the course in the previous semester took a closer look at this data and explored ways to visualize the information. Were there intriguing or interactive ways to plot linguistic information? Could the data be mapped? Were there patterns to be discovered when expressed in visual form?

The class of 35 students was surveyed twice: once at the beginning of the semester and again toward the end. The Language Maps, Language Clouds research team took these two sets of note cards, devised ways to capture, organize, and analyze the information using linguistic concepts, explored ways to visualize the results of our queries, and aimed to share our findings online. Our goal is to share both processes and results as we seek to deepen our understanding of the data in an interesting, interactive setting.

Even though we all participated in every aspect of the project, we each had an area of expertise. Ellie learned how to use and troubleshoot Viewshare and later, with Dr. Quizon, explored Tableau. She worked with Anastasia who was in charge of Excel and added knowledge of its features as needed for the project. I was in charge of learning how to build a blog on WordPress.