There are a number of different ways to “partition” the number 7, that is, to write it as a sum of positive whole numbers where the order of the terms doesn’t matter. Writing them all out:

7 = 1 + 1 + 1 + 1 + 1 + 1 + 1

7 = 2 + 1 + 1 + 1 + 1 + 1

7 = 2 + 2 + 1 + 1 + 1

7 = 3 + 1 + 1 + 1 + 1

7 = 4 + 1 + 1 + 1

7 = 3 + 2 + 1 + 1

7 = 5 + 1 + 1

7 = 4 + 2 + 1

7 = 3 + 3 + 1

7 = 2 + 2 + 2 + 1

7 = 3 + 2 + 2

7 = 6 + 1

7 = 5 + 2

7 = 4 + 3

7 = 7
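
If you would rather not trust my bookkeeping, the list above can be reproduced with a few lines of Python. This is only a minimal sketch: the function name `partitions` and its `largest` parameter are my own choices for illustration, not anything standard.

```python
def partitions(n, largest=None):
    """Yield each partition of n as a list of parts in non-increasing order.

    `largest` caps the size of the next part, so that 3 + 2 + 2 is produced
    once and reorderings such as 2 + 3 + 2 are never generated.
    """
    if largest is None:
        largest = n
    if n == 0:
        # Nothing left to split: the empty sum is the one way to finish.
        yield []
        return
    # Pick the first (biggest) part, then partition what remains
    # using parts no bigger than the one just chosen.
    for first in range(min(n, largest), 0, -1):
        for rest in partitions(n - first, first):
            yield [first] + rest


if __name__ == "__main__":
    for parts in partitions(7):
        print("7 = " + " + ".join(str(p) for p in parts))
```

Running it prints one line per partition of 7, in a different order from my list but with the same entries.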

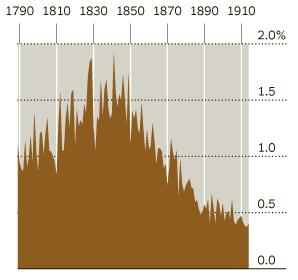

There are 15 ways listed above, so the “partition number” of 7 is 15. Mathematicians typically write this as P(7) = 15, and they have been studying P(n) for other n for hundreds of years. That long history should be a clue that the partition function P(n) has been difficult to get a handle on. For one thing, it grows absurdly fast: P(10) is already up to 42, and P(100) = 190,569,292. I am certainly not writing all those down! More importantly, the numbers I just quoted were not computed using any neat little formula. Until now, the only way of computing P(n) when n got large involved calculating (by hand or computer) all the partition numbers that came before. For example, calculating P(100) required calculating each of P(1) to P(99).
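
To get a feel for what “calculating all the partition numbers that came before” looks like, here is a minimal Python sketch that builds the whole table P(0), P(1), ..., P(n) in one go. It uses a standard counting trick (first allow only parts of size 1, then also parts of size 2, and so on). That choice is my assumption about how one might do it, not necessarily how the values quoted above were originally computed, and the name `partition_numbers` is made up for illustration.

```python
def partition_numbers(n):
    """Return a list `table` where table[k] is P(k) for every k from 0 to n."""
    # table[k] counts the ways to write k using only the part sizes
    # considered so far. With no parts allowed, only 0 can be written
    # (as the empty sum).
    table = [1] + [0] * n
    for part in range(1, n + 1):
        # Allow `part` as a new part size: every way of writing
        # total - part extends to a way of writing total.
        for total in range(part, n + 1):
            table[total] += table[total - part]
    return table


if __name__ == "__main__":
    table = partition_numbers(100)
    print(table[7], table[10], table[100])
```

Notice that there is no way to ask this code for P(100) alone: the entry for 100 is only correct once every smaller entry has been filled in, which is exactly the nuisance described above.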

All that has changed, however, thanks to a breakthrough made by Ken Ono and Zachary Kent during a hike in the woods. “The problem for a theoretical mathematician is you can observe some patterns, but how do you know these patterns go on forever? We were, frankly, completely stuck. We were stumped,” Ono says in a brief interview (below) about the days leading up to their realization.

During a break from their day-to-day duties at Emory University, on a nature hike to Tallulah Falls, “we realized that the process by which these numbers fold over on themselves was very much like what you see in the woods.”

“It was as simple as that. Maybe we could translate the problem of studying the partition numbers, this difficult problem of why do they fold over on themselves, maybe we could turn this into a problem where: what if we were just walking among the partition numbers, from one partition number to the next and then to the next? Could we turn the idea of a switchback into a concrete mathematical structure which we could prove had to occur over and over again? We realized that, if we could do that, then our fractal structure would have to be true and instead of having a walk that ends at the falls, our walk would just go on forever.”

The switchback phenomenon that Ono, Kent, and Amanda Folsom at Yale University detected and mathematized shows that the partition numbers have a fractal-like structure that was completely unexpected. Related work with Jan Bruinier of the Technical University of Darmstadt in Germany also produced the first explicit formula for the P(n) function. Their news was picked up by Wired, Scientific American, the Smithsonian magazine, and online at the Free Republic, of all places. The “fractal-like” nature of the solution also let a number of those outlets seize the opportunity to link to media of psychedelic fractal patterns like the Mandelbrot set. I’m not above that either, so feel free to check out the awesome “zoom-in” on the Mandelbrot set that heads this entry!