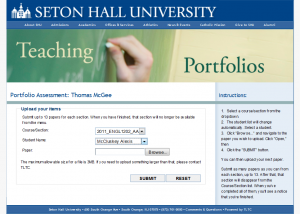

The system starts from a data set that includes all the faculty, all the classes, and all the registered students for those classes. When a faculty member first logs in, they select their class section, and the dropdown menu beneath it is immediately populated with the roster for that class. After selecting a student, the faculty member chooses and uploads a writing sample for that student.

Each professor is expected to upload between 8 and 13 samples for each of their sections.
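The upload flow described above can be sketched roughly as follows. This is a minimal illustration, not the system's actual code: the lookup tables, the sample IDs, and the function names (`sections_for`, `roster_for`, `record_upload`, `samples_still_needed`) are all hypothetical, and only the 8 to 13 range comes from the text.

```python
# Hypothetical sample data; the real system loads this from its own data set.
SECTIONS_BY_FACULTY = {
    "jsmith": ["ENG101-01", "ENG101-02"],
}
ROSTER_BY_SECTION = {
    "ENG101-01": ["S1001", "S1002", "S1003"],
    "ENG101-02": ["S2001", "S2002"],
}

# Expected number of uploaded samples per section (from the text above).
MIN_SAMPLES, MAX_SAMPLES = 8, 13


def sections_for(faculty_id):
    """Sections shown in the first dropdown after login."""
    return list(SECTIONS_BY_FACULTY.get(faculty_id, []))


def roster_for(faculty_id, section_id):
    """Student IDs shown in the second dropdown once a section is chosen."""
    if section_id not in SECTIONS_BY_FACULTY.get(faculty_id, []):
        raise PermissionError(f"{faculty_id} does not teach {section_id}")
    return list(ROSTER_BY_SECTION.get(section_id, []))


def record_upload(uploads, faculty_id, section_id, student_id, filename):
    """Store one writing sample per student, keyed by section."""
    if student_id not in roster_for(faculty_id, section_id):
        raise ValueError(f"{student_id} is not on the roster for {section_id}")
    section_uploads = uploads.setdefault(section_id, {})
    if student_id not in section_uploads and len(section_uploads) >= MAX_SAMPLES:
        raise ValueError(f"{section_id} already has {MAX_SAMPLES} samples")
    section_uploads[student_id] = filename


def samples_still_needed(uploads, section_id):
    """How many more samples are needed to reach the minimum for a section."""
    return max(0, MIN_SAMPLES - len(uploads.get(section_id, {})))
```

Keying the roster by section keeps the second dropdown a simple lookup, and checking the roster again on upload guards against a sample being attached to a student outside the chosen section.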

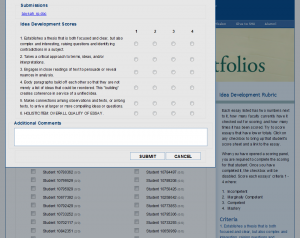

In the second phase, faculty evaluate the samples. A randomized grid shows only the student ID numbers, along with how many times each student has been scored and how many faculty are currently working on that student's sample. The ideal is to score each student several times to get a fair average. To prevent the bias of everyone picking one of the same few students at the top of the screen, each load of the screen lists the students in random order, and every sixty seconds the screen is redrawn in a new order. When a box is selected, the number is greyed out and the counts are incremented.
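The grid behaviour described above might be modelled along these lines. This is a sketch under stated assumptions rather than the system's implementation: the `Sample` class, its field names, and the helper functions are hypothetical, and the sixty-second redraw is represented only by calling `shuffled_grid` again on each refresh.

```python
import random
from dataclasses import dataclass, field


@dataclass
class Sample:
    """One anonymized writing sample, identified only by student ID."""
    student_id: str
    active_scorers: int = 0              # faculty currently working on it
    scores: list = field(default_factory=list)

    @property
    def times_scored(self):
        return len(self.scores)


def shuffled_grid(samples, rng=None):
    """Return the samples in a fresh random order without mutating the input.

    Calling this on every page load, and again on each timed redraw,
    keeps any one sample from always sitting at the top of the screen.
    """
    if rng is None:
        rng = random.Random()
    grid = list(samples)
    rng.shuffle(grid)
    return grid


def open_sample(sample):
    """A faculty member selects a box: bump the in-progress count."""
    sample.active_scorers += 1


def submit_score(sample, score):
    """A scoring is completed: record it and release the in-progress slot."""
    sample.active_scorers = max(0, sample.active_scorers - 1)
    sample.scores.append(score)


def average_score(sample):
    """Mean of all recorded scores, or None if nobody has scored it yet."""
    if not sample.scores:
        return None
    return sum(sample.scores) / len(sample.scores)
```

Tracking in-progress scorers separately from completed scores lets the grid show both counts, so faculty can steer toward samples that have had little attention so far.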

The popup panel has a link to the essay, along with the scoring rubric and grid. Faculty are strongly encouraged to complete a scoring once started, and various popup warnings help to enforce this.

The system is now in its second full year of use and has proven to be a valuable aid for end-of-year assessment.