Newsom Blocks AI Safety Bill

Sheamus Finnegan

Staff Writer

On September 29th, California Governor Gavin Newsom, whose state is home to over sixty percent of the world’s leading generative artificial intelligence companies, vetoed the controversial California Senate Bill No. 1047 (SB 1047). The bill would have established oversight of, and regulations on, the development of emerging artificial intelligence (AI) models.

Introduction

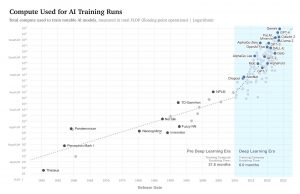

Now more than ever, it is clear that artificial intelligence is the future. Since the release of ChatGPT in 2022, AI has both developed rapidly and surged in popularity. According to a 2018 OpenAI analysis, the amount of computing power used to train the largest AI models had been doubling roughly every 3.4 months since 2012. Amid this swift evolution, countless industries and corporations have rushed to adopt the technology, and Bloomberg Intelligence projects that the global market for generative AI will reach $1.3 trillion within the next decade. These rapid changes have prompted much public discourse about the safety of AI models. On September 29th, California Governor Gavin Newsom vetoed Senate Bill No. 1047, which would have imposed safety regulations on the development of large AI models and created a state entity charged with monitoring the development of future models.

Breaking Down the Bill

State Senator Scott Wiener’s Senate Bill No. 1047, also known as the “Safe and Secure Innovation for Frontier Artificial Intelligence Models Act,” opens by asserting that “If not properly subject to human controls, future development in artificial intelligence may also have the potential to be used to create novel threats to public safety and security.” Among the possible threats, the bill lists “biological, chemical, and nuclear weapons, as well as weapons with cyber-offensive capabilities.” To address these concerns, the bill would have established the Board of Frontier Models, a government body tasked with monitoring the development of large AI models. It also would have required developers to implement certain safety measures before training large AI models. These requirements included protecting the model against unauthorized access, misuse, and unsafe modifications, including maintaining the capability to quickly shut down the entire model; implementing a written safety and security protocol; and undergoing an annual review by a third-party auditor.

Support and Opposition

While a majority of Californians supported the bill, according to a poll from the Artificial Intelligence Policy Institute (AIPI), SB 1047 faced strong opposition from Silicon Valley and major players in the tech industry, who argued that such regulations would hamper the development of AI. In his statement on the bill, Newsom acknowledged the importance of AI safety concerns but voiced his opposition to the bill. He argued that the bill would not be effective because it regulates models based only on their size, rather than “tak[ing] into account whether an AI system is deployed in high-risk environments.” The bill targets large models that cost more than $100 million to train and that require more than a specified amount of computing power. Newsom pointed out that smaller models are not necessarily safer, since small and medium-sized AI models often handle sensitive data as well: “By focusing only on the most expensive and large-scale models, SB 1047 establishes a regulatory framework that could give the public a false sense of security about controlling this fast-moving technology.”

Going Forward

The safety of AI, and the balance between its risks and rewards, is and will likely remain a topic of much debate. As bills like SB 1047 show, the world is still figuring out how to handle this latest technological revolution while trying to strike a balance between an appropriate level of regulation and what Newsom describes as a “free-spirited cultivation of intellectual freedom.”

Contact Sheamus at sheamus.finnegan@student.shu.edu